I really stumbled on some stuff, and I think I have traction on a couple of problems.

1. My sizes are absolutely perfect. For me, this is a big milestone, and it tells me a lot. I’m getting perfect distances between mostly perfect sector headers, which tells me that I’m really not dropping even a single byte across a whole track. Or, if I am, it gets made up somewhere else, which really wouldn’t happen at a perfect 1:1 ratio.

2. I’m getting repeatable results, even if they aren’t 100%. This means there aren’t any flaky, intermittent, non-deterministic results.

3. On my current ‘problem’ track, I’m getting 7 of 11 good sectors. Sectors 6, 7, 9, and 10 are failing the data checksum.

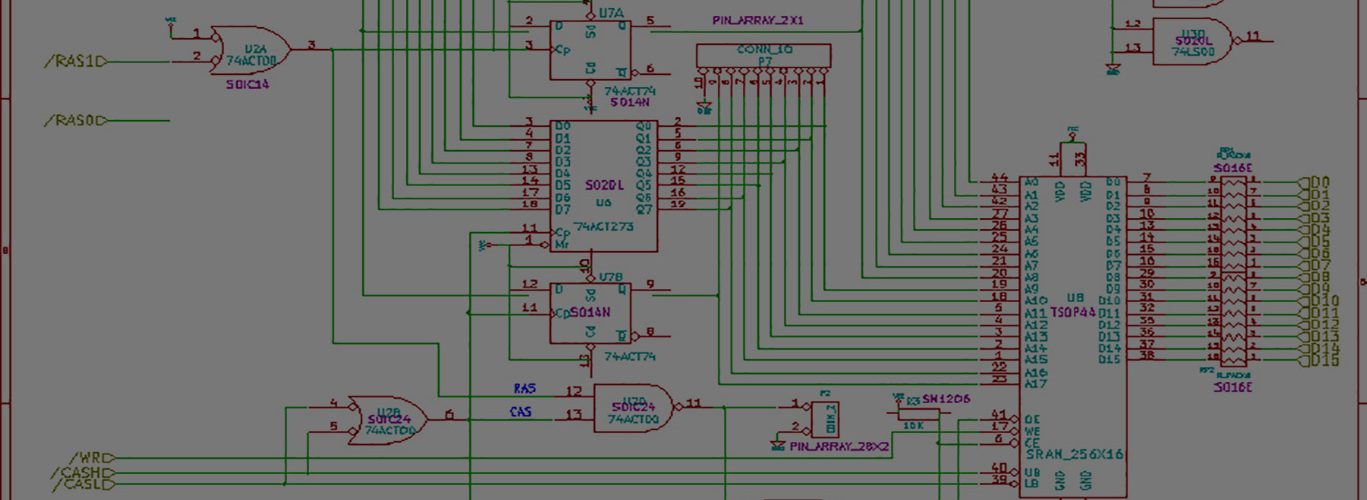

4. My original read routines, which have always appeared solid, are performing perfectly with the RAM, and much, much faster: something like 580ns total for the whole ISR, including a write to the RAM chip. I’m very happy with the speed and length of the code. Very clean, easy, and nice.

5. When I compare the decoded bad sector with the actual contents of the sector, they match identically in content, BUT the read data is consistently shifted one byte to the right. So even “bad” sectors are only “bad” in that my decode routines aren’t checking the checksum properly. The routines I’m currently using are Marco Veneri’s ported code. If you recall from this post:

https://www.techtravels.org/?p=48#comment-69

I think I’m running into similar problems, but poring over his code hasn’t produced anything interesting. What’s weird is that the checksum sometimes works and sometimes doesn’t. You might ask, how can it work at all if the data is consistently shifted? It just so happens that the value I lose at the beginning (normally a 0x00 for whatever reason, probably a file-system/OS character) is the same as the value that follows the end of the data segment. So the checksum comes out right, but, like I said, only in certain cases.
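To make that coincidence concrete, here is a minimal sketch using a toy byte-wise XOR checksum. This is an assumption for illustration only: it is not the real checksum used on the disk, and the names `xor_checksum` and `window` are hypothetical. It just shows that sliding the read window by one byte leaves this kind of checksum unchanged exactly when the byte dropped at the front equals the byte picked up at the end.

```python
from functools import reduce


def xor_checksum(data: bytes) -> int:
    """Toy byte-wise XOR checksum (illustrative assumption, not the real one)."""
    return reduce(lambda acc, byte: acc ^ byte, data, 0)


def window(stream: bytes, start: int, length: int) -> bytes:
    """Return `length` bytes of `stream` beginning at offset `start`."""
    return stream[start:start + length]


SEGMENT_LEN = 4

# Case 1: the byte after the segment (0x00) equals the first byte (0x00).
lucky = bytes([0x00, 0x12, 0x34, 0x56, 0x00])
# Case 2: the byte after the segment (0xFF) differs from the first byte.
unlucky = bytes([0x00, 0x12, 0x34, 0x56, 0xFF])

for name, stream in (("lucky", lucky), ("unlucky", unlucky)):
    aligned = xor_checksum(window(stream, 0, SEGMENT_LEN))
    shifted = xor_checksum(window(stream, 1, SEGMENT_LEN))
    print(name, hex(aligned), hex(shifted), aligned == shifted)
```

In the first case the shifted window still passes, even though every byte is in the wrong position; in the second it fails. A checksum that works on wider words would behave differently in detail, but the same kind of coincidence is what lets some misaligned sectors slip through.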

It’s refreshing to see that the bad sectors aren’t really bad and that it’s a SOFTWARE problem on the PC. I suspect the software mainly because it does heavy bit-level math, using tricks to combine data, etc. I’d like to rewrite it, but I don’t have a grasp YET on how to do that. I think that floppy.c code that I mention here

https://www.techtravels.org/?p=34

works well, is easy to read, etc. But I haven’t looked yet to see if they even check the checksum. I see where they decode data, and that’s nice, BUT they just ignore the checksum? Like I said, though, I haven’t checked to be sure whether they do it elsewhere. I’d really like to write my own code, so that I can truly understand how it works. Fixing or porting other people’s code that I don’t truly understand is a pain in the butt, although lord knows I’ve done plenty of it.
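For reference, the "combine data" step is small once it is written out. The sketch below assumes the standard Amiga trackdisk layout, where the odd-numbered data bits and the even-numbered data bits are stored in separate MFM longwords; I have not confirmed that either Marco’s code or floppy.c does it exactly this way, and the function names are hypothetical.

```python
DATA_BITS = 0x55555555  # MFM data bits sit in the even bit positions


def decode_long(odd: int, even: int) -> int:
    """Merge one odd-bits longword and one even-bits longword into 32 data bits.

    Masking with DATA_BITS throws away the MFM clock bits.
    """
    return (((odd & DATA_BITS) << 1) | (even & DATA_BITS)) & 0xFFFFFFFF


def split_long(value: int) -> tuple:
    """Inverse of decode_long, producing data bits only (no clock bits)."""
    return (value >> 1) & DATA_BITS, value & DATA_BITS


odd, even = split_long(0xDEADBEEF)
print(hex(decode_long(odd, even)))
```

Writing the inverse alongside the decoder makes it easy to round-trip test the bit math in isolation, before worrying about sector alignment or checksums.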

If the data contained in the bad sector is 100% correct, which it is, that means that my SX code is working perfectly. I checked the stuff byte for byte on the MFM-decoded side.

The main problem this whole time has been the PC not being fast enough, which I’ve resolved through USB and FRAM. Tomorrow’s task will be to narrow down the problem in the PC software, or to figure out how the checksum works and write the darn routines myself.
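As a starting point for that, here is a rough sketch of how I understand the checksum to work on a standard Amiga trackdisk sector: XOR all the raw (still MFM-encoded) longwords of the region together, then mask off the clock bits. Treat this as an assumption to verify against real sectors, not a confirmed description of what the ported code does; `mfm_checksum` is a hypothetical name.

```python
import struct

DATA_BITS = 0x55555555  # keep data bits, drop MFM clock bits


def mfm_checksum(raw: bytes) -> int:
    """XOR every big-endian 32-bit word of `raw`, then mask off clock bits."""
    if len(raw) % 4 != 0:
        raise ValueError("region must be a whole number of longwords")
    checksum = 0
    for (word,) in struct.iter_unpack(">I", raw):
        checksum ^= word
    return checksum & DATA_BITS


# Tiny example region of two raw longwords.
region = bytes.fromhex("AAAAAAAA55555555")
print(hex(mfm_checksum(region)))
```

The result would then be compared against the checksum stored in the sector, after that stored value has itself been decoded from its odd and even longwords. Because the XOR runs over whole longwords, the start offset of the region matters, which makes a one-byte misalignment a plausible culprit.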
