- DTR-based PC-to-SX flow control is now enabled and working, thanks to jd2xx. I’ve tested it, and the SX responds appropriately to a STOP request. Strangely, with the new jd2xx code it doesn’t appear that flow control is even needed, whereas it was CLEARLY needed with rxtx.

- The heat problems I was worried about were no big deal. The slow boat from China arrived with my IR thermometer. My SX goes from 74F to 100F when idling, and to about 118F when operating. I’m going to keep monitoring it, but Parallax tech support says 120-130F is perfectly normal. The chip’s max is +185F.

- Performance update: from start to finish on the PC side, my Java code reports 141 ms to transfer each track. My logic analyzer says 122 ms or so, but I’ve found the reason for the gap. As mentioned in earlier posts, my USB2SER device uses a USB transfer size of 4096 bytes, or 3968 bytes of payload after 128 bytes of overhead (2 status bytes per 64-byte packet). It has to transfer three 3968-byte blocks plus one 3334-byte block, for a total of 15238 bytes. The last block is subject to a latency timer of 16 ms (adjustable down to 1 ms), because the USB2SER waits for another 634 bytes before transmitting to the PC. Sooooo you get 122 + 16 + 3 ms of scheduling delay and voilà, you’re at 141 ms. Maybe it makes sense to just pad out to the full buffer. (Not to be rhetorical, but it does: 634 bytes * 8 us per byte (see earlier posts) = ~5 ms instead of 16.) I’d have to be fairly precise, though, since I don’t want to send even 1 extra character. I could also just lower the latency timer value.

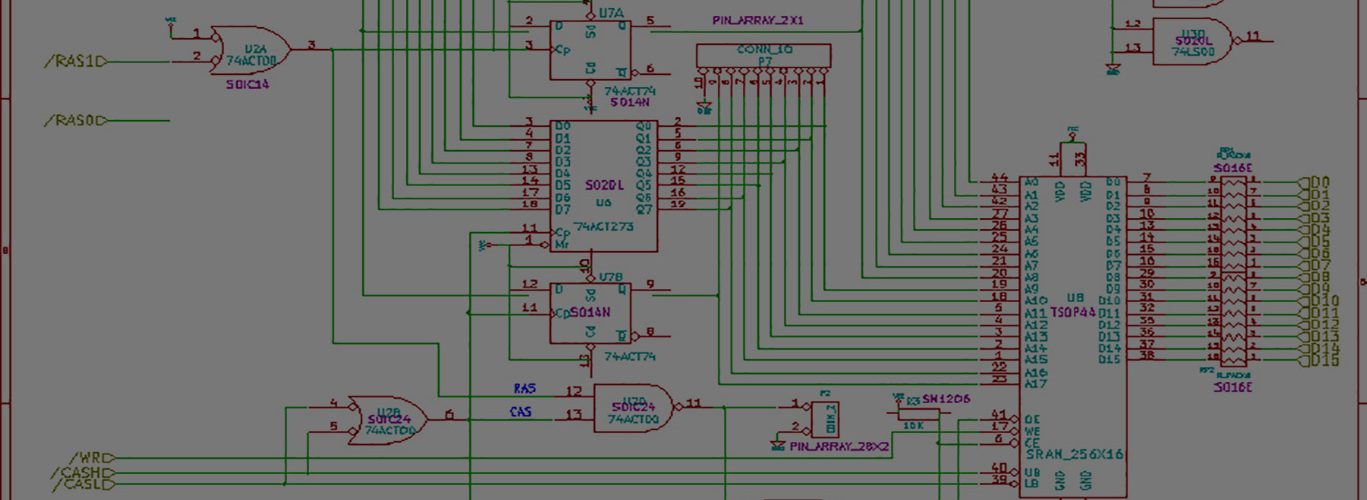
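The block arithmetic in the performance update above can be checked with a few lines of Java. This is a minimal sketch, not code from the project: the constants (4096-byte transfers, 2 status bytes per 64-byte packet, roughly 8 us per byte, a 16 ms latency timer) are taken from the figures in the post, and the class and method names are made up for illustration.

```java
// Sketch of the USB2SER transfer arithmetic. All constants are assumptions
// taken from the post's figures, not values read from any FTDI API.
final class UsbTransferMath {
    static final int TRANSFER_SIZE = 4096;          // bytes per USB transfer
    static final int PACKET_SIZE = 64;              // bytes per USB packet
    static final int STATUS_BYTES_PER_PACKET = 2;   // overhead per packet
    static final double US_PER_BYTE = 8.0;          // serial-side cost per byte
    static final double LATENCY_TIMER_MS = 16.0;    // default latency timer

    /** Payload bytes per transfer: 4096 - (4096 / 64) * 2 = 3968. */
    static int payloadPerTransfer() {
        return TRANSFER_SIZE - (TRANSFER_SIZE / PACKET_SIZE) * STATUS_BYTES_PER_PACKET;
    }

    /** Number of completely filled transfers for a message of totalBytes. */
    static int fullTransfers(int totalBytes) {
        return totalBytes / payloadPerTransfer();
    }

    /** Bytes in the final, partially filled transfer (0 if none). */
    static int lastTransferBytes(int totalBytes) {
        return totalBytes % payloadPerTransfer();
    }

    /** Padding needed so the last transfer is full and ships immediately. */
    static int paddingNeeded(int totalBytes) {
        int rem = lastTransferBytes(totalBytes);
        return rem == 0 ? 0 : payloadPerTransfer() - rem;
    }

    /** Time (ms) spent sending that padding at US_PER_BYTE per byte. */
    static double paddingCostMs(int totalBytes) {
        return paddingNeeded(totalBytes) * US_PER_BYTE / 1000.0;
    }

    public static void main(String[] args) {
        int track = 15238;
        System.out.printf("payload/transfer=%d full=%d last=%d pad=%d padCost=%.2f ms vs latency %.0f ms%n",
                payloadPerTransfer(), fullTransfers(track), lastTransferBytes(track),
                paddingNeeded(track), paddingCostMs(track), LATENCY_TIMER_MS);
    }
}
```

For a 15238-byte track this gives 3 full transfers, a 3334-byte remainder, and 634 bytes of padding costing about 5 ms, compared with waiting out the 16 ms latency timer.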

- I posted a schematic (already slightly outdated) here. I also added it to the blog menu on the right-hand side.

Things are progressing nicely now that I’ve put the required time into it. I have something on the way that is going to consume a lot of my time in the future, so I’m trying to bang this out.

That extra 16 ms from the latency timer on the last block has been eliminated. I set up an “event character” of 0xFE, which causes the USB2SER to immediately forward its buffer to the PC. 0xFE doesn’t occur in normal user data, and it would make little difference if it did.

I’m getting times of around 124 or 125 ms, which closely matches the ~122 ms I see on the logic analyzer. This is very good: it means my Java code adds little to no delay, and the data arrives at my application almost immediately.

I occasionally get larger delays: 140, 172 and 187 ms are the outliers, but they still crop up. I want to investigate why this happens, but I’m pretty sure it’s related to USB transfer sizes etc. I’ll have to read a whole disk and compute an average to make sure those outliers are just exceptions and don’t affect the overall transfer speed.
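One way to check whether the outliers matter is to log every per-track time over a whole-disk read and compare the mean with the median: if the outliers are rare, the median stays near 124-125 ms while only the mean drifts upward. Below is a hypothetical Java helper for that; the names are illustrative, and the outlier threshold you pass in is your own choice, not a value from these posts.

```java
import java.util.Arrays;

// Hypothetical helper for summarizing per-track transfer times (in ms)
// logged over a whole-disk read. Nothing here comes from the project itself.
final class TrackTimingStats {

    /** Times one track transfer; `transfer` stands in for whatever reads a track. */
    static long elapsedMs(Runnable transfer) {
        long start = System.nanoTime();
        transfer.run();
        return (System.nanoTime() - start) / 1_000_000;
    }

    static double mean(long[] timesMs) {
        if (timesMs.length == 0) return 0.0;
        long sum = 0;
        for (long t : timesMs) sum += t;
        return (double) sum / timesMs.length;
    }

    static double median(long[] timesMs) {
        if (timesMs.length == 0) return 0.0;
        long[] sorted = timesMs.clone();
        Arrays.sort(sorted);
        int mid = sorted.length / 2;
        return sorted.length % 2 == 1
                ? sorted[mid]
                : (sorted[mid - 1] + sorted[mid]) / 2.0;
    }

    /** How many tracks took longer than the chosen threshold. */
    static int countAbove(long[] timesMs, long thresholdMs) {
        int n = 0;
        for (long t : timesMs) {
            if (t > thresholdMs) n++;
        }
        return n;
    }
}
```

The median is the useful number here because a handful of 170-190 ms tracks can pull the mean up noticeably while barely moving the median.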

I ran some performance numbers today. I can squeeze another ~72 ms/track out of the system by:

1> Instead of reading 15232 bytes, read the absolute minimum of 13055 (worst case: 11*1088 + 1087). This reduces the time spent reading the physical disk and saves ~36 ms/track.

2> Combine reading memory and transmitting. I looked into this tonight, and the only solution I could come up with is frankly too complicated to be worth the extra 36 ms it gains. My code would become complex, partially unreadable, and hard to maintain.
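The worst-case figure in item 1> follows from the fact that a read can begin one byte into a sector: the remaining 1087 bytes of that sector are useless, and 11 complete sectors must still follow. Here is a small Java sketch of the arithmetic, assuming 1088 raw MFM bytes per sector and a 2 us bit cell (so 16 us per raw byte); the class is illustrative only.

```java
// Sketch of the worst-case read-length arithmetic in item 1>.
// Assumptions: 11 sectors/track, 1088 raw MFM bytes/sector, and a 2 us MFM
// bit cell, i.e. 16 us to read one raw byte off the disk.
final class MinimumRead {
    static final int SECTORS_PER_TRACK = 11;
    static final int RAW_BYTES_PER_SECTOR = 1088;
    static final double US_PER_RAW_BYTE = 2.0 * 8;

    /** Worst case: start one byte into a sector, waste its remaining
     *  1087 bytes, then read 11 complete sectors. */
    static int minimumReadBytes() {
        return SECTORS_PER_TRACK * RAW_BYTES_PER_SECTOR + (RAW_BYTES_PER_SECTOR - 1);
    }

    /** Disk time saved (ms) by reading the minimum instead of currentBytes. */
    static double savedMs(int currentBytes) {
        return (currentBytes - minimumReadBytes()) * US_PER_RAW_BYTE / 1000.0;
    }

    public static void main(String[] args) {
        System.out.printf("minimum=%d bytes, saved=%.1f ms%n",
                minimumReadBytes(), savedMs(15232));
    }
}
```

Under those assumptions, dropping from 15232 to 13055 bytes saves 2177 raw bytes, or roughly 35 ms, which is in the same ballpark as the ~36 ms estimate.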

Hi. It’s been a while since I looked at your project, and it still makes a good read!

You mention an ‘event character’ of 0xFE. This sounds dangerous. Presumably you want your floppy drive to be really flexible (eventually), so people can read (or at least dump) IBM PC, Atari ST and other disks. Also, what about weird copy protection schemes or corrupted data? These questions may apply to some other design decisions, such as the track length. I remember hearing that the Amiga checks it has the whole track by reading slightly more and checking for a duplicated sector. Maybe I dreamt that. I’m no expert.

Anyway, great project!

(I’m posting this in a separate comment as it seems to make sense to do so.)

A friend had his (old) university degree project on floppy disks with no backup, and 1 disk became unreadable. He was going to throw the disks out, and I said I’d try to recover it. I used GNU’s ddrescue (under Gentoo Linux) to read as many sectors as possible using a normal Samsung USB floppy drive.

By rereading sectors I managed to recover quite a lot of the disk and most of the project. Then I tried cooling the disk and drive in a freezer (a trick that is supposed to work on dead/dying hard drives) and I could read more. I also heated the disk up in ‘almost kettle-hot’ water (keep the disk dry in some plastic), and I could read even more of the bad sectors. Then I tried cooking the disk in a saucepan and accidentally melted the disk a bit, bending it. I took the magnetic medium out and used kettle-steam to unwarp it, and it was much less readable, but I seemed to get a few more bad sectors off!

Anyway, ddrescue is designed for any block device, and depends on the Linux kernel legacy floppy driver to return the data. If the CRC fails, the Linux legacy floppy driver returns no data at all (and triggers an error). CRCs have their uses, but it would be better if I could at least retrieve corrupted data so I have something closer to the true data. Under FreeBSD, the kernel has a ‘FDOPT_NOERROR’ option that will return data even if the CRC fails, for better error recovery. I checked the Linux kernel source code, and its floppy driver appears to return nothing if the CRC fails. All this stuff applies to legacy internal floppy drives (which I don’t have). So,

1. Can USB floppy drives (which use the USB mass storage driver, I think) be made to return data even on a CRC data error, like legacy floppy drives supposedly can under FreeBSD?

2. Is there software for the classic Amiga to use its floppy drive for advanced data recovery of IBM PC floppy disks? Maybe I could just dump the disk, and since the Amiga doesn’t check IBM disk CRCs, no error actually happens??? I could just reread a few times and average the noisy data of bad sectors???

Thanks for reading everybody!

Sorry! Forget question 2 in my comment above. The PC floppy disk is high density 1.44MB, which won’t work in my Amiga.

GrimRC, sorry about the delay.

I normally see comments as soon as they’re posted, but for whatever reason I missed yours!!

To address your points:

1. Event character ‘0xFE’: this character is illegal in MFM and will not show up on an MFM-encoded disk. It doesn’t matter whether it’s an IBM disk or otherwise: if it’s MFM-encoded, it shouldn’t happen. I don’t think any copy protection techniques changed the actual disk encoding method, but I might be wrong!

BUT, here’s the dealio. Even if they use 0xFE, the byte still passes through. It forces the USB chip to transmit immediately, which just causes additional (smaller) USB reads on the PC side; the right number of bytes should still come back in a reasonable time.

The same thing applies to corrupted disks: if it’s that corrupted (0xFE is 1111 1110, which would be really screwy, since that’s a ton of back-to-back pulses), then the checksum will fail and it will retry. This character by no means prevents or (completely) terminates the transfer.
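The reason 0xFE can’t appear in valid MFM is that MFM never places two 1 bits next to each other, and 1111 1110 is full of adjacent 1s. A minimal Java check of that rule follows; it’s a sketch that only looks within a byte and across one byte boundary, and it ignores the other half of the MFM run-length rule (at most three 0s between 1s).

```java
// Minimal MFM legality check: in MFM a 1 bit is never directly followed by
// another 1 bit. This only catches adjacent 1s; it does not enforce the
// "at most three 0s in a row" half of the MFM run-length rule.
final class MfmCheck {

    /** True if no two adjacent bits inside the byte are both 1. */
    static boolean isLegalMfmByte(int b) {
        int v = b & 0xFF;
        return (v & (v >>> 1)) == 0;
    }

    /** True if the last bit of prev and the first bit of next aren't both 1. */
    static boolean isLegalBoundary(int prev, int next) {
        return (prev & 0x01) == 0 || (next & 0x80) == 0;
    }

    public static void main(String[] args) {
        System.out.println(isLegalMfmByte(0xFE)); // false: 1111 1110
        System.out.println(isLegalMfmByte(0x44)); // true:  0100 0100
    }
}
```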

2. Track length: as far as I know, the ADF file format only supports a standard track: 11 sectors * 1088 raw MFM bytes per sector = 11,968 raw bytes, which decode (2 raw bytes per data byte) to 11,968 / 2 = 5984 bytes, of which the ADF image keeps the 11 * 512 = 5632 bytes of sector data. As a result, a different track length is impossible.

3. USB floppy drives: I’m not 100% sure how they work. They’re almost certainly regular PC drives with a USB-to-floppy interface, probably not entirely unlike my project. Maybe that would be fun: buy a couple of USB floppies, take them apart, reverse engineer them, and see how they addressed the issues/problems/approaches I’ve seen.

ANYWAYS, the drives themselves do *not* perform any type of checksumming. They simply read the flux transitions off the disk and send them to the controller. Floppy drives are relatively dumb: the controller has to step the head, turn on the spindle motor, etc. The controller’s job is to read the data, extract the header information, checksum the track, and decide whether to send the actual data back to the application or have the drive re-read. In essence, for my project anyway, a re-read just means listening to the read line a little longer. My motor is always on.

So it’s your CONTROLLER deciding whether to return the data, not the drive itself.

Also, I actually read one byte shy of 12 sectors plus a 1,000-byte gap, i.e. 13,055 + 1,000 = 14,055 bytes, off the disk. 11,968 bytes is a true full track. I don’t process the partial data at the beginning and end of a track read; you almost always (except in the most exceptional case) start reading in the middle of a track.
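Skipping the partial data at each end of a read amounts to locating the sync words and keeping only the sectors that fit entirely inside the buffer. Below is a hedged Java sketch, not the project’s code. It assumes the buffer has already been aligned to the sync word (so it’s byte-aligned), uses the standard Amiga sync pattern 0x4489 0x4489, and assumes 1080 raw bytes follow the 4 sync bytes of each 1088-byte sector (the other 4 bytes being the 0xAAAA 0xAAAA preamble).

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: find the sectors that lie completely inside one raw track read.
// Assumptions: the buffer is already aligned to the sync word (byte-aligned),
// sectors start with the standard Amiga sync 0x4489 0x4489, and 1080 raw
// bytes follow those 4 sync bytes (1088 per sector incl. the AAAA AAAA preamble).
final class SyncScan {
    static final int BYTES_AFTER_SYNC = 1080;

    /** Offsets (just past the sync words) of every sector that fits in the buffer. */
    static List<Integer> completeSectorOffsets(byte[] raw) {
        List<Integer> offsets = new ArrayList<>();
        int i = 0;
        while (i + 3 < raw.length) {
            boolean sync = (raw[i] & 0xFF) == 0x44 && (raw[i + 1] & 0xFF) == 0x89
                    && (raw[i + 2] & 0xFF) == 0x44 && (raw[i + 3] & 0xFF) == 0x89;
            if (!sync) {
                i++; // data before the first sync is a partial sector: ignored
                continue;
            }
            int body = i + 4;
            if (body + BYTES_AFTER_SYNC <= raw.length) {
                offsets.add(body); // complete sector: keep it
            }                      // otherwise: partial sector at the end, skip it
            i = body;
        }
        return offsets;
    }
}
```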

I’m actually pretty excited about the future in the area you’re talking about. Right now, if one sector is bad, I re-read the whole track. If sector 0 is bad on the first read but sector 2 is bad on the second, neither track read yields a good track. BUT, what if I performed multiple track reads, selected only the good sectors, and assembled a fully working track AFTER all the reads?

ALSO, the chance of reading any one sector correctly is much greater than the chance of reading every sector on a track correctly. BUT, if I stumble onto an impossible-to-read sector, I could always ZERO OUT the sector (maybe it was blank to begin with!!!) or use intelligent analysis to fake the header of the data portion, so that the disk would check out OK according to AmigaDOS, but the data would be 0’s.
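The multi-read idea could look something like the following Java sketch. It’s hypothetical: the `SectorRead` record, the 11 x 512-byte track layout, and the assumption that each read already reports a per-sector checksum result are all illustrative. It keeps the first good copy of each sector and zero-fills any sector that never read cleanly, flagging which sectors were actually recovered.

```java
import java.util.List;

// Hypothetical sketch: build one track from several reads by keeping the
// first copy of each sector whose checksum passed. Sectors that never read
// cleanly are zero-filled and flagged. SectorRead and the 11 x 512-byte
// layout are illustrative assumptions, not the project's actual types.
final class TrackAssembler {
    static final int SECTORS = 11;
    static final int SECTOR_BYTES = 512;

    record SectorRead(int sector, byte[] data, boolean checksumOk) {}

    /**
     * @param reads     sector results from any number of track reads, in read order
     * @param recovered output: recovered[s] is true if sector s had a good copy
     * @return 11 sectors of data; unrecovered sectors are all zeros
     */
    static byte[][] assemble(List<SectorRead> reads, boolean[] recovered) {
        byte[][] track = new byte[SECTORS][];
        for (SectorRead r : reads) {
            int s = r.sector();
            if (s < 0 || s >= SECTORS || !r.checksumOk()) continue;
            if (track[s] == null) track[s] = r.data().clone();
        }
        for (int s = 0; s < SECTORS; s++) {
            recovered[s] = track[s] != null;
            if (track[s] == null) track[s] = new byte[SECTOR_BYTES]; // zero-fill fallback
        }
        return track;
    }
}
```

Making a zero-filled sector pass AmigaDOS would still require generating a valid header and checksums, which this sketch doesn’t attempt.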

If you happen to catch recent posts, I’ve discussed the possibility of recovering data in (what I think is) a completely new and different way. I can’t find the posts offhand, but I could do tricky tricky things like:

Use the structure of MFM itself to provide error correction and recovery: the clock bits are inserted in a very specific, completely consistent way. What this means is:

1> Clock bits take up 50% of the data on a floppy.

2> If some of those are corrupt, it’s not the end of the world.

3> While I use PLL code to stay in sync based on transitions, a couple of missing clock bits is OK. My microcontroller can keep cycling at 2 us pretty reliably. In the end, bit sync is established using a sync word.

4> Clock bits get masked off completely. They are unused once past the initial read stage.

5> Instead of reading data in BYTES, I could read it in MFM groups. This means that instead of having to know that 1001 0010 is an OK complete byte with clock and data, I know that “100”, “100”, and “10” are all valid MFM groupings. If I see “11” someplace, it’s illegal, and I know approximately where the error occurred.
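Since every clock bit is fully determined by its neighbours (a clock bit is 1 only when the data bits on both sides of it are 0), checking each clock bit against that rule pinpoints roughly where a stream went bad, which is the idea behind items 4> and 5>. Below is a sketch in Java, assuming the bit stream is already aligned so that even positions are clock bits and odd positions are data bits; bits are passed as a ‘0’/‘1’ string purely for readability, and a real decoder would work on packed bytes.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch of using MFM clock bits for error location. Assumes the stream is
// already aligned so even indices are clock bits and odd indices are data
// bits; bits are a '0'/'1' string only to keep the example readable.
final class MfmClockCheck {

    /** Masks off the clock bits, keeping only the data bits. */
    static String dataBits(String raw) {
        StringBuilder sb = new StringBuilder();
        for (int i = 1; i < raw.length(); i += 2) {
            sb.append(raw.charAt(i));
        }
        return sb.toString();
    }

    /**
     * Returns indices of clock bits that break the MFM rule
     * (clock = 1 only when the data bits on both sides are 0).
     * The first clock bit is skipped because its left neighbour is unknown.
     */
    static List<Integer> badClockBits(String raw) {
        List<Integer> bad = new ArrayList<>();
        for (int i = 2; i + 1 < raw.length(); i += 2) {
            boolean prevData = raw.charAt(i - 1) == '1';
            boolean nextData = raw.charAt(i + 1) == '1';
            boolean expected = !prevData && !nextData;
            boolean actual = raw.charAt(i) == '1';
            if (expected != actual) bad.add(i);
        }
        return bad;
    }

    public static void main(String[] args) {
        String good = "010010010100";   // data bits 100110, MFM-encoded
        String broken = "011010010100"; // clock bit 2 flipped: creates "11"
        System.out.println(dataBits(good));       // 100110
        System.out.println(badClockBits(good));   // []
        System.out.println(badClockBits(broken)); // [2]
    }
}
```

A flipped clock bit shows up at its exact position, and an illegal “11” is caught the same way, since a 1 clock bit next to a 1 data bit always violates the rule.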

I need to expand on my thoughts here. In the meantime, DON’T THROW AWAY IMPORTANT BUT CURRENTLY UNREADABLE AMIGA FLOPPIES.

Maybe I’ll expand into other disk formats DOWN THE ROAD.

Thanks for the comment and the participation!!!