So I was wondering exactly how effective the XOR checksum that Commodore used for the amiga sectors was. If I read a sector, and perform the checksum, and the checksum matches the stored checksum, then the data is the same, right? Not always. I had expected it to be better, maybe not MD5 or SHA-1 level, but I expected it to be decent.

I had run into this paper a year ago when I was looking at my transfer checksums. But this certainly also applies to the amiga sectors, too.

Some good excerpts:

- the percentage of undetected two-bit

errors becomes approximately 1/k (k being checksum size), which is rather poor performance for

a checksum (i.e., 12.5% undetected errors for an 8-bit checksum, and 3.125% for a 32-bit checksum). - The XOR checksum has the highest probability

of undetected errors for all checksum algorithms in this study….. - There is generally no reason to continue the common practice of

using an XOR checksum in new designs, because it has the same software computational cost as an

addition-based checksum but is only about half as effective at detecting errors.

So I wanted to actually prove myself that XOR is that bad, looking at it from the amiga sector perspective, so I wrote a quick program to:

- Create a random block of data 512 bytes long

- Calculate the 32-bit XOR checksum based on 32-bit chunks at a time and store it as the last byte (as does the amiga)

- Select a number of bit inversions(basically corrupt the data in a specific way) which can affect data and/or stored checksum

- Recalculate the checksum of the modified block

- If the checksums MATCH, meaning that two different sets of data yield the same checksum, then this is an undetected error.

- Count the number of undetected errors vs total errors.

That paper lists the properties of an XOR checksum, and I wanted to compare results:

- Detects all single-bit errors. (my testing confirms)

- Fails to detect some two-bit errors. (my testing confirms, see below)

- If total number of bit errors is odd, XOR checksum detects it. (confirmed with 1,3,5,7 bit errors)

- Fails to detect any even number of bit errors that occur in the same bit position of the checksum computational block. (confirmed with 2,4,6,8)

The two-bit errors is really the case that I worry about. If two bit errors occur in the same bit position, and the inverted oppositely(1—>0 and 0—>1), then it won’t be detected. So how often does this happen with random data? Paper’s author, Maxino, says 3.125% of the time. I can definitely confirm this. My testing shows 1.8 million hits over 56 million tries. There are some differences with Amiga data, but I think the results can be the same. I might load some example amiga sectors and try them.

Once the number of bit errors increase, the probability of them happening in the same bit position, in the same direction, and occurring evenly on each position goes down —- and therefore the overall chance of undetection decreases as well.

4 bit undetected errors happen 0.3% of the time.

6 bit undetected errors happen 0.04% of the time.

8 bit undetected errors happen 0.009% of the time.

I have other testing to do, including running burst error tests.

So for each sector, you really don’t want to see only two bit errors occur. A single bit error, sure. Multiple bit errors, ok, because we can detect them. You don’t want to think you have a good copy when it fact the checksum passing was a false positive.

That is a good way to illustrate it -I knew it was bad, but that is worse than I thought too. I have heard that you can do integrity checking on the MFM encoding too, though I am not sure how.

Yes, you can definitely integrity check the MFM. I’ve put a fair bit of thought into this.

https://techtravels.org/?p=191

The structure and rules of MFM allow for educated guesses of missing bits.

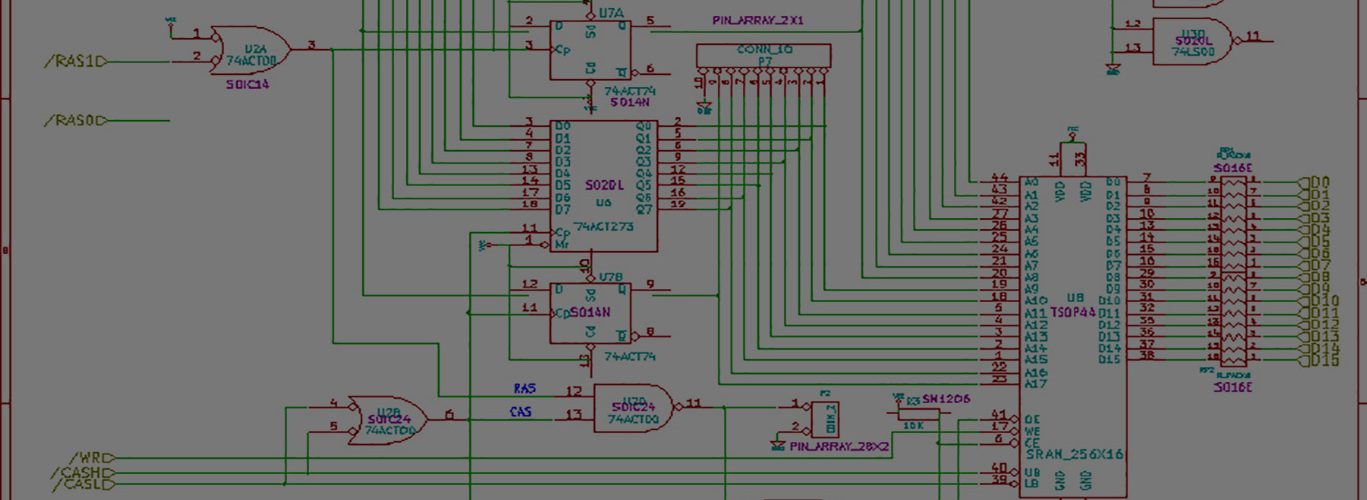

I have revisited these ideas in light of my changes on how the FPGA solution works. I now pass delta T pulse times between the FPGA and the PC. And so the PC constructs the MFM. The PC always creates perfect raw amiga MFM — it can’t create back-to-back-1’s, or too many zeros in a row.

As long as the times are in range, it will produce correct MFM.

So this changes the domain of the problem for me a little bit.

Thanks for the post.

Nice. Thanks for that.

I guess the best solution is a combination of both… and maybe there are other things that can be done too… I guess with data at this level, checking the disk format against AmigaDOS specs is also a bit like an integrity check.

So what are your plans? Is this only a personal hobby project, or are you planning to provide people with the stuff to build their own?

So the file system stuff is another layer up. And I don’t pretend to know how it works, although it is documented pretty well. For me, it’s about whether the data made it cleanly from the disk to the .ADF. I do currently look at and sanity check the sector headers, which are part of the on-disk data. Once the data has been MFM decoded, the .ADF doesn’t contain or hold this sector header info — it’s really only for facilitating the extraction from the disk. If this makes sense, I know my wording is awkward.

The sector header contains

FF = what I call the ffvalue, basically identifies disk as amiga 1.0 format. I’ve never seen a different value.

TT = track number

SS = sector number

SG = number of sectors to the gap. (I don’t use this info currently)

plus

Header checksum

data checksum

The interesting thing about a sector is that the ODD bits and the EVEN bits for each 32-bit word are spread a 1/2 sector apart. So all the ODD bits (with clock interleaved) appear first on the track, and then all the EVEN bits (with clock interleaved) appear last.

This was to help fast decoding using the blitter.

=============

Your question has a complicated answer.

The main reason why I started this project was because I wanted to learn about electronics, digital logic, and so on. I wanted to learn microcontrollers, and I’ve done that. I wanted to learn about how FPGAs work, how to program them, and so on. I’m still working on that. This is really the main goal for me.

HOWEVER, five years ago, I found a need for this tool. I looked at my stack of amiga floppies, and my USB port on my windows machine, and thought, “there’s got to be a way.” And so I’ve created a neat little tool. Sure there are commercial versions. But I wanted to do it myself.

The project from day one has been open source. Now I’ve done a crappy job of putting my code etc online. This is going to change. Part of my reluctance in posting is because I’m an amateur. The java code isn’t clean, it isn’t perfect. I’m brand new (well, 6-8 months of time on the side) to Verilog, so that’s not great either. While everything works, and is written with robustness — it’s sometimes ugly and needs refactored. I’m vowing to make a change. I’m convinced my code and methods can help others, and hiding it away on my harddrive while claiming it’s open-source is hypocritical.

I’d like people to build their own, but there are just some obstacles — and it’s mainly a $$$ thing. My eval board is $169 + $30(add on board) + $20 (usb->ttl) + power supply. You get the picture. The pieces and parts can really add up.

The project doesn’t require much in terms of eval hardware. Basically, just the FPGA, a connector for the I/O pins to the floppy. I don’t use the other connectors/chips. Don’t use the DRAM, the flash, the LCD, the switches, the LEDs (not really), ethernet, VGA, etc. So a cheaper board could be purchased.

I think my project could be ported to cheaper hardware and even Altera or another vendor’s FPGA. I do use a Xilinx coregen-generated FIFO, but the other vendors have that too.

If anyone was serious about taking this on, I’d support them 150% in the build effort. I’ve got to improve my documentation on this too — although I have most of it semi-documented.

1) That makes sense, yes. Thanks for the info. I have read that fully checking the AmigaDOS format is what the SPS guys do, but I guess that is a bit different – a later verification stage.

2) That all sounds good, thanks for your comprehensive answer. These USB devices are starting to become a bit like buses too aren’t they!