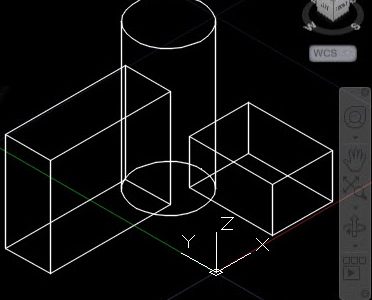

So for my fixed point number format for my 3D project, I’ve come up with a few possibilities :

- I think a 16.16 number format might work for me. This means I’ll represent the whole portion via one 68k word, and the fractional portion with one 68K word.

- It would look like this: <Sign-bit><15-bit integer>.<16-bit integer>

- with using 0 for Sign-bit if the number is positive, and 1 if the number is negative

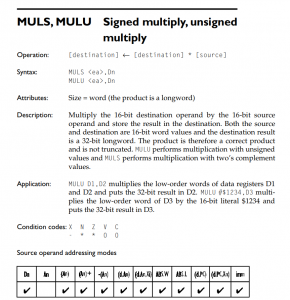

- This allows me to use standard multiplication instructions, like muls.w on the portions that require it. The instruction will do the sign handling.

- My first approach of multiplying these numbers together would be to use the distributive property of multiplication such that you’d end up with:

- (a+b) The whole part + the fractional part of the first number

- (c+d) The whole part + the fractional part of the second number

- So, (a+b)(c+d), would take (4) multiplications, (3) muls.w and a (1) mulu.w.

- This sounds super expensive and just inelegant. Gotta be a better way.

- I’m thinking I can use regular addition and subtraction, with adding/subtracting 1 to the whole part if the fractional part overflows/goes negative. I’ll have to figure out how the CCR flags react in those cases.

- libfxmath looks like it could be so promising. If only I could build C! 🙂

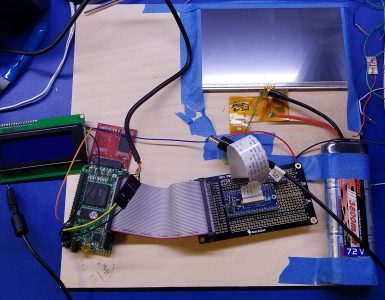

I’m definitely going down the path of working this out. What I really need is an easy way to set multiple memory locations in bulk based on a file. The monitor software I’m using only allows for accessing 64K of RAM, I’m either going to have to extend it, or find something else that will take an SREC or similar format, and allow me to upload it.

Looks interesting! I assume libfxmath doesn’t have any hardware dependencies? Does it rely on the C standard library? If not, you could pretty easily compile it with any generic 68K compiler, and then copy the compiled code into your program.

Will you be using 2’s complement for your signed numbers? I assume yes, since that’s what the 68K signed instructions are designed for, but the . seems to describe something slightly different.

As I understand it, to multiply two 16.16 fixed point numbers, you would ignore the decimal point and treat them as regular 32-bit integers, and multiply them with MULS.L. This produces a 64-bit result. Then you would right-shift the result 16 places, and take the bottom 32 of the 48 remaining bits, to get the result in 16.16 format. Except I now see that MULS.L is only available on 68020 and later.

Unless it’s a simple routine with minimal function calling, argument passing, local memory usage, and so on — using compiled C is not exactly trivial. There’s problems associated with doing so. Snippets of self- contained code work well enough.

Yes, regarding 2’s compliment. Easy enough to convert, but yeah, necessary for the sign handling code and other parts of the muls instruction!

Trust me, I definitely thought of essentially promoting or treating the fractional part as just the lower order bits of a bigger number to multiply. Yeah, you got it — it takes two words and produces a longword.

Thanks again!

Here’s a code example for 32-bit unsigned multiplication for 68000: https://stackoverflow.com/questions/34569308/multiplying-two-long-words-on-68000-assembler

Thanks for the link. It sounds similar to what I planned.

Following the letter conventions I had in the post: I need to do this

Multiply A*C and add

Multiply A*D and add

Multiply B*C and add

Multiply B*D. (Since both b and d are fractional — the sign is owned by A and C, so the last operation can be a cheaper mulu.

I stumbled on a newer version of this: https://opencores.org/project/verilog_fixed_point_math_library/manual

It did occur to me that the raw intrinsic value associated with each vector is unimportant. It’s only maintaining the distance between vectors in a linear fashion. The only thing that matters is the scaling factor come display time.

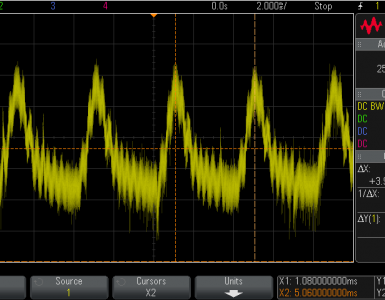

I do wonder if doing this in hardware might be amazingly fast. There is one problem, memory accesses on this J68 core seem to have a fixed time despite the actual access time. I could get this down to a couple clocks, but it might not matter. Alternatively I gotta hack the core in some way which would not be trivial.

Thanks!